Distributional’s product (DBNL) is designed to fit into your existing production AI stack, so you can use it without fear of lock-in and with minimal engineering overhead. Our Model Connections make it easy to integrate DBNL with whatever LMs you already have in place for your AI products. Our Data Connections let you leverage the data you already have, wherever it lives, so you can start performing behavioral analytics on your agents right away.

This post focuses on Data Connections and, more specifically, examples for fitting your data to the DBNL Semantic Convention.

Data Connections

Distributional’s Data Connections support three ways for DBNL to ingest production AI logs. OpenTelemetry (OTEL) Trace Ingestion publishes OpenTelemetry traces directly to DBNL as your agent runs. SDK Log Ingestion pushes data manually, or as part of a daily orchestration job, using the Python SDK. SQL Integration Ingestion pulls data from a SQL table into DBNL on a schedule.

We’ve also written a few adapters that conform your traces and spans to our Semantic Convention. They work with our SDK or your OTEL connections, and are covered later in this post.

Semantic Convention

DBNL ingests traces produced by telemetry frameworks with different semantic conventions, as well as tabular Logs in a user-defined format. To compute Metrics and derive Insights consistently across these ingestion formats, and to get the richest possible analytics from your data, we define a Semantic Convention for the data uploaded to DBNL.

If you are using OTEL Trace Ingestion and the OpenInference semantic convention, this happens automatically. If you are using SDK Log Ingestion or SQL Integration Ingestion, you need to ensure that your Column names adhere to our Semantic Convention for best results. In this post, we’ll walk through a few adapters that conform your existing data to DBNL’s Semantic Convention.
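
For SDK or SQL ingestion, conforming Column names usually comes down to a rename step before upload. Here is a minimal sketch of that step in plain Python. The source names (`prompt`, `completion`, `created_at`) and the target names (`input`, `output`, `timestamp`) are placeholders chosen for illustration, not DBNL’s documented column names, so check the Semantic Convention reference for the real ones.

```python
# Hypothetical mapping from an existing log schema to target column names.
# Both sides of this mapping are illustrative assumptions.
COLUMN_MAP = {
    "prompt": "input",
    "completion": "output",
    "created_at": "timestamp",
}


def rename_columns(rows, column_map):
    """Return new rows with keys renamed per column_map.

    Keys that are not in the mapping pass through unchanged.
    """
    return [
        {column_map.get(key, key): value for key, value in row.items()}
        for row in rows
    ]


rows = [
    {
        "prompt": "Hi",
        "completion": "Hello!",
        "created_at": "2025-01-01T00:00:00Z",
        "user": "u1",
    }
]
renamed = rename_columns(rows, COLUMN_MAP)
```

The same mapping could be applied as column aliases in the `SELECT` that feeds a SQL integration, so the rename lives in one place either way.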

Adapters for DBNL’s Semantic Convention

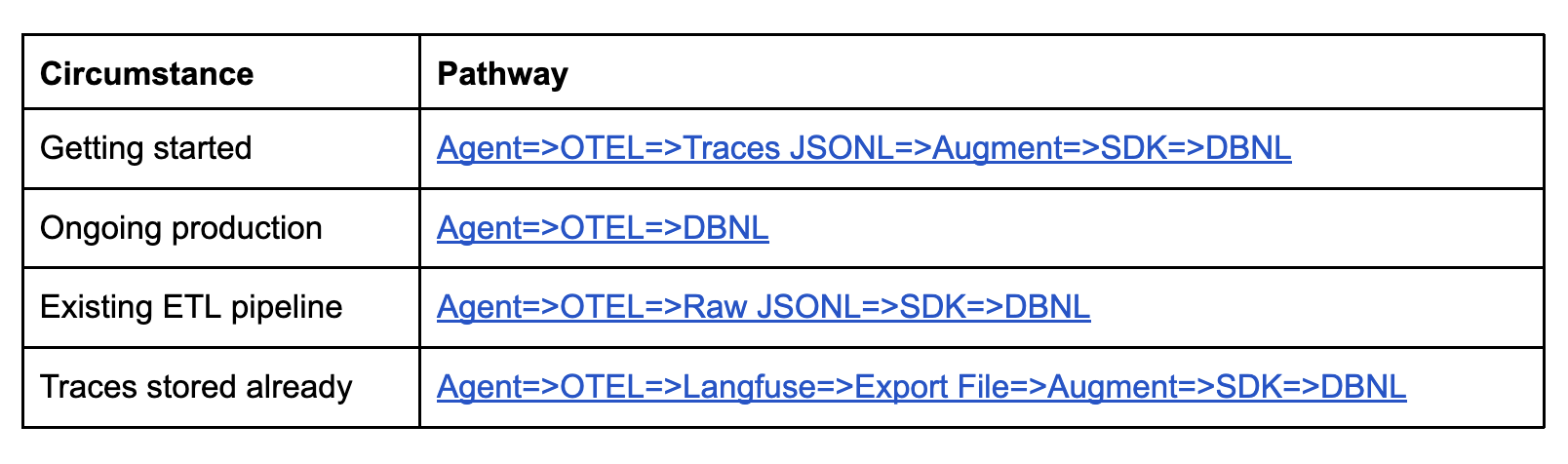

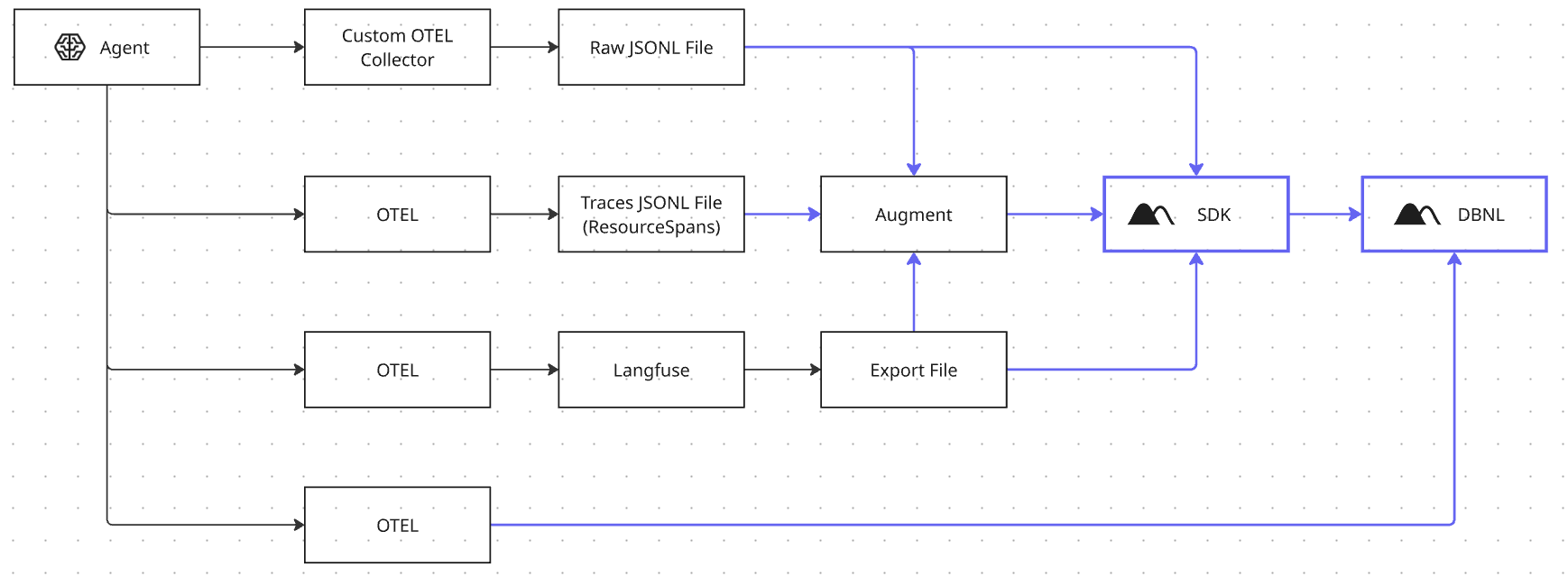

This chart shows the pathways from your AI agent to DBNL Data Connections that map to our Semantic Convention, with the recommended path highlighted.

You’ll notice that each of these pathways uses the OpenTelemetry standard. One pathway pushes traces directly from OTEL into DBNL, but we recommend passing the data through our SDK instead. That lets you augment the data with rich fields that may be unavailable at span creation, like user feedback, session information, or other data you can join after the fact. This richer data enables DBNL to produce more compelling analytics and provide deeper insight into AI agent usage, cost, quality, and speed.
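
To make the augmentation step concrete, here is a minimal sketch of joining after-the-fact user feedback onto span rows by trace ID. The field names (`trace_id`, `rating`, `session_id`, `user_feedback`) are assumptions for illustration, and the upload to DBNL is intentionally left out.

```python
def augment_with_feedback(span_rows, feedback_by_trace):
    """Left-join feedback onto span rows by trace_id.

    Rows without a matching feedback entry keep their data and get None
    for the added fields, so no span is dropped.
    """
    augmented = []
    for row in span_rows:
        feedback = feedback_by_trace.get(row["trace_id"], {})
        augmented.append(
            {
                **row,
                "user_feedback": feedback.get("rating"),
                "session_id": feedback.get("session_id"),
            }
        )
    return augmented


# Span rows as they might look after export from an OTEL collector.
span_rows = [
    {"trace_id": "t1", "output": "Hello!"},
    {"trace_id": "t2", "output": "Sorry, I can't help with that."},
]

# Feedback collected later, for example from a thumbs-up/down widget.
feedback_by_trace = {"t1": {"rating": "thumbs_up", "session_id": "s-42"}}

augmented = augment_with_feedback(span_rows, feedback_by_trace)
```

A left join is the deliberate choice here: feedback is typically sparse, and dropping spans without feedback would bias any downstream analytics.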

Here is a quick overview of each pathway with a link to an example based on what we recommend given your circumstance.

And here is a short description of each:

- Agent => OTEL => Traces JSONL => Augment => SDK => DBNL: Use a local OTEL collector to write raw spans to a file as resourceSpans in the OpenInference semantic convention. The spans are then easy to augment, convert to logs using `flatten_otlp_traces_data`, and upload to DBNL via the SDK using `dbnl.log`.

- Agent => OTEL => DBNL: Send OTEL traces directly to DBNL without augmentation. This is the simplest path to getting data into DBNL and requires the fewest intermediate steps, storage, and computation. It requires the agent to send all required and useful information via OTEL directly, which may require extra instrumentation.

- Agent => OTEL => Raw JSONL => SDK => DBNL: Extract all of the information needed for the DBNL Semantic Convention, write it to a local JSONL file, load it into a dataframe, and upload it to DBNL via the SDK.

- Agent => OTEL => Langfuse => Export File => Augment => SDK => DBNL: Convert Langfuse trace and observation export files into the DBNL Semantic Convention.
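
To illustrate what the JSONL-based pathways involve, here is a rough sketch of flattening one OTLP JSON line (a `resourceSpans` payload) into one flat record per span. It is a simplified stand-in written for this post, not the implementation of `flatten_otlp_traces_data`: the function name `flatten_resource_spans`, the output keys, and the handling of attribute types are all assumptions, and array or key-value attributes are skipped.

```python
import json


def _attr_value(value):
    """Unwrap an OTLP AnyValue such as {"stringValue": "x"} into a Python value."""
    if "intValue" in value:
        return int(value["intValue"])  # OTLP JSON encodes 64-bit ints as strings
    for key in ("stringValue", "boolValue", "doubleValue"):
        if key in value:
            return value[key]
    return None  # arrayValue / kvlistValue are not handled in this sketch


def flatten_resource_spans(line):
    """Turn one OTLP JSON line into a list of flat per-span dicts."""
    payload = json.loads(line)
    rows = []
    for resource_span in payload.get("resourceSpans", []):
        for scope_span in resource_span.get("scopeSpans", []):
            for span in scope_span.get("spans", []):
                start_ns = int(span.get("startTimeUnixNano", 0))
                end_ns = int(span.get("endTimeUnixNano", 0))
                row = {
                    "trace_id": span.get("traceId"),
                    "span_id": span.get("spanId"),
                    "name": span.get("name"),
                    "duration_ms": (end_ns - start_ns) / 1e6,
                }
                # Span attributes (e.g. OpenInference's input.value) become columns.
                for attr in span.get("attributes", []):
                    row[attr["key"]] = _attr_value(attr["value"])
                rows.append(row)
    return rows


sample = json.dumps({
    "resourceSpans": [{
        "scopeSpans": [{
            "spans": [{
                "traceId": "abc",
                "spanId": "123",
                "name": "llm_call",
                "startTimeUnixNano": "1000000000",
                "endTimeUnixNano": "1500000000",
                "attributes": [
                    {"key": "input.value", "value": {"stringValue": "Hi"}},
                    {"key": "llm.token_count.total", "value": {"intValue": "12"}},
                ],
            }]
        }]
    }]
})
rows = flatten_resource_spans(sample)
```

Once spans are in this flat shape, they can be joined with other data and mapped to the Semantic Convention before being handed to the SDK.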

Finally, here is a graphic that may be easier to follow:

Get started today

DBNL is designed to fit with your existing stack. It is deployed in your environment, so your data never leaves it. It comes with a full set of enterprise features for administration, authentication, and data security, and it is built to scale with minimal engineering overhead. These adapters are one example of how we keep evolving the product to be even easier to use with your existing AI agent setup.

Distributional’s full service is open and free to use. Install DBNL today to experience these enterprise features yourself.

We are also always happy to learn more about your use case and enterprise needs, so reach out to nick-dbnl@distributional.com with any questions.