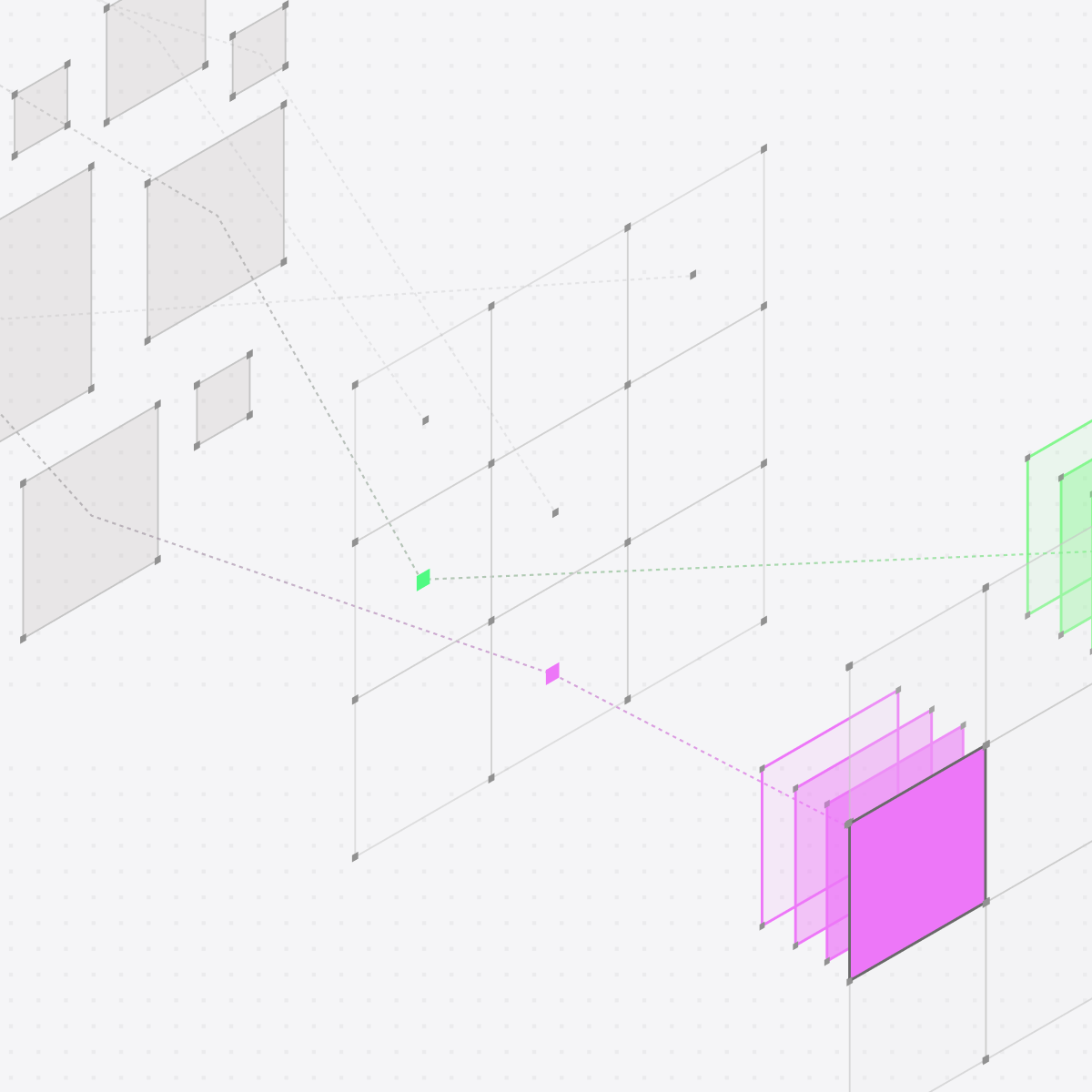

Distributional automates analysis of enriched production AI logs to surface interesting signals of AI product behavior: the interrelation of inputs, prompts, context, tools, model, and response. The product is composed of three components: platform, pipeline, and workflow. The platform lets you deploy, administer, scale, and integrate the product with your stack. The pipeline automates data analysis at scale, and that analysis powers the workflow, which helps you turn insights into continuous improvements to your AI products.

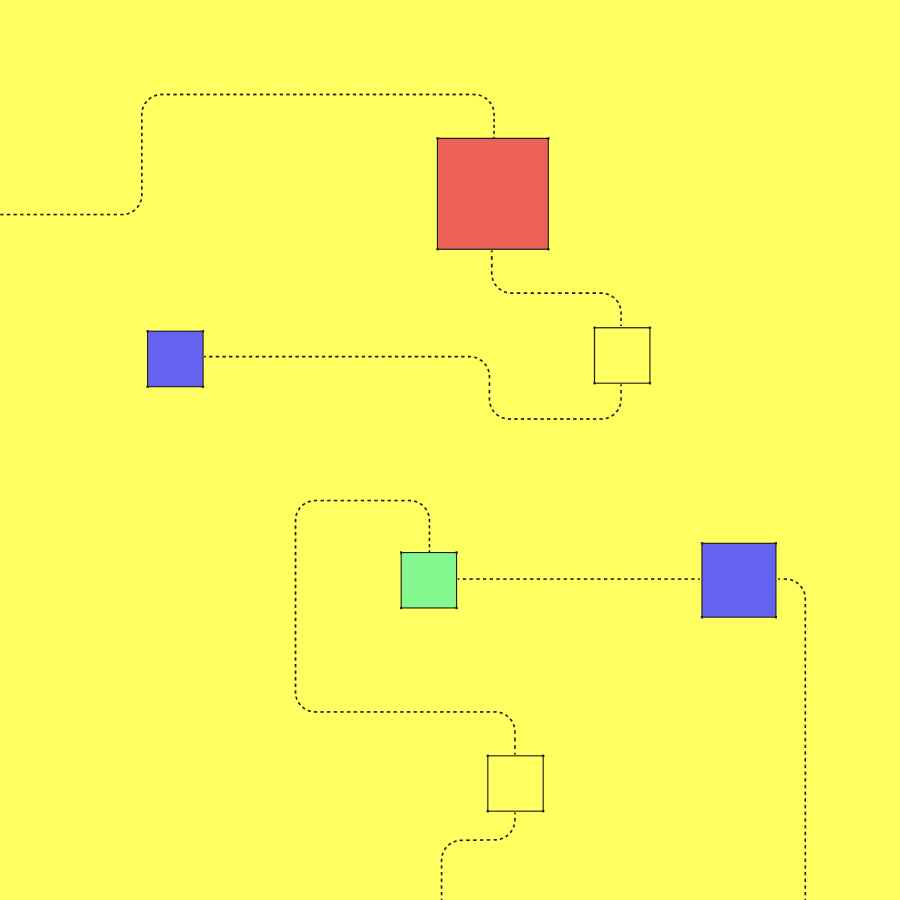

Our goal with this pipeline is to give your AI product team (engineers, product managers, and data scientists alike) a daily starting point for deeper analysis of your AI products. The pipeline runs a daily batch analysis of your production AI logs in four steps.

Enrich production AI logs

The first step in the pipeline is to enrich raw, unstructured production AI logs with a variety of properties that can be parsed, clustered, analyzed, correlated, and judged. The pipeline computes these properties from your production AI logs automatically. If you already rely on metrics from development, you can add them as well to get a richer picture of behavior against performance expectations. The goal is to build as rich, deep, and broad a picture of behavior as possible so you don’t miss potentially interesting signals.
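To make the enrichment step concrete, here is a minimal sketch of turning one raw log record into named numeric properties and merging in a metric the team already uses. The `LogRecord` fields, the property names, and the `enrich` helper are all hypothetical illustrations; they are not Distributional's schema, property set, or API.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


# Hypothetical raw log record; the field names are illustrative,
# not Distributional's actual log schema.
@dataclass
class LogRecord:
    prompt: str
    response: str
    latency_ms: float


# A property function maps one raw record to one numeric value.
PropertyFn = Callable[[LogRecord], float]

# Example properties assumed for illustration only.
DEFAULT_PROPERTIES: Dict[str, PropertyFn] = {
    "prompt_words": lambda r: float(len(r.prompt.split())),
    "response_words": lambda r: float(len(r.response.split())),
    "response_chars": lambda r: float(len(r.response)),
    "latency_ms": lambda r: r.latency_ms,
}


def enrich(record: LogRecord, extra: Optional[Dict[str, PropertyFn]] = None) -> Dict[str, float]:
    """Compute every default property plus any team-supplied metrics.

    A team metric with the same name as a default overrides it.
    """
    props = {**DEFAULT_PROPERTIES, **(extra or {})}
    return {name: fn(record) for name, fn in props.items()}


record = LogRecord(
    prompt="What is the refund policy?",
    response="Refunds are available within 30 days.",
    latency_ms=412.0,
)
# A hypothetical metric the team already uses in development, added next to the defaults.
custom = {"mentions_refund": lambda r: float("refund" in r.response.lower())}
enriched = enrich(record, custom)
print(enriched)
```

Keeping each property as a small named function makes it easy to add development metrics without touching the built-in set.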

Analyze enriched logs to uncover behavioral signals

The pipeline runs this analysis on the enriched logs every day to tease out interesting signals about AI product behavior, using several techniques. It classifies each trace by topics the pipeline has learned and defined for your team. It clusters the distributions of these properties according to a variety of conventions and correlates these clusters to identify interesting, unexpected, or anomalous connections between properties. It runs an efficient LLM-as-judge on inputs and responses and flags any that violate a previously defined threshold or have drifted from prior behavior. Finally, it applies statistical tests to detect changes in the distributions of these metrics and properties.
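The source does not say which statistical tests the pipeline uses. As one plausible illustration of detecting a change in a property's distribution, here is a sketch of a two-sample Kolmogorov–Smirnov test comparing a baseline window with today's values. The function names are hypothetical, and the rejection threshold uses the standard large-sample approximation, which is only rough for small samples.

```python
import math
from bisect import bisect_right
from typing import Sequence


def ks_statistic(a: Sequence[float], b: Sequence[float]) -> float:
    """Two-sample KS statistic: the largest gap between the two empirical CDFs."""
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    xs, ys = sorted(a), sorted(b)
    d = 0.0
    # The ECDFs only jump at observed values, so checking those points finds the maximum gap.
    for v in xs + ys:
        cdf_a = bisect_right(xs, v) / len(xs)
        cdf_b = bisect_right(ys, v) / len(ys)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def ks_critical_value(n: int, m: int, alpha: float = 0.05) -> float:
    """Large-sample approximation of the KS rejection threshold at significance level alpha."""
    c = math.sqrt(-0.5 * math.log(alpha / 2))
    return c * math.sqrt((n + m) / (n * m))


def has_drifted(baseline: Sequence[float], today: Sequence[float], alpha: float = 0.05) -> bool:
    """Flag a distribution change when the KS statistic exceeds the critical value."""
    return ks_statistic(baseline, today) > ks_critical_value(len(baseline), len(today), alpha)


baseline = [float(x) for x in range(20, 40)]  # e.g. a prior window of response word counts
today = [float(x) for x in range(35, 55)]     # today's values, shifted upward
print(ks_statistic(baseline, today), has_drifted(baseline, today))
```

The KS test is distribution-free, which suits properties with unknown shapes; a production pipeline would also need to correct for running many such tests every day.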

Publish signals to your dashboard and channels

All of this analysis is formulated into human-readable signals, each backed by evidence that links to the charts and logs supporting the claim. When a previously defined user threshold is violated, the pipeline also triggers an alert. These signals and alerts are automatically published to your team’s dashboard, hosted by the Distributional service, so any member of the extended team has a central, standard place to view this information in historical context. They are also published to configurable notification channels, so they appear in your inbox or Slack channel each morning to start your day.
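The threshold-to-alert step can be sketched as a check of each daily metric against user-defined bounds that produces a readable message plus its supporting evidence. The `Threshold`, `Alert`, and `check_thresholds` names, the metric names, and the trace IDs are hypothetical, and the sketch stops short of publishing anywhere (no dashboard, email, or Slack integration is shown).

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Threshold:
    """Hypothetical user-defined bounds on a daily metric; either side may be omitted."""
    lower: Optional[float] = None
    upper: Optional[float] = None

    def violated_by(self, value: float) -> bool:
        too_low = self.lower is not None and value < self.lower
        too_high = self.upper is not None and value > self.upper
        return too_low or too_high

    def describe(self, name: str, value: float) -> str:
        # Only called for violating values, so one of the two bounds was crossed.
        if self.upper is not None and value > self.upper:
            return f"{name} = {value:g} exceeds the upper threshold {self.upper:g}"
        return f"{name} = {value:g} is below the lower threshold {self.lower:g}"


@dataclass
class Alert:
    metric: str
    value: float
    message: str
    # Identifiers of supporting logs; a real signal would also link to charts.
    evidence: List[str] = field(default_factory=list)


def check_thresholds(
    daily_metrics: Dict[str, float],
    thresholds: Dict[str, Threshold],
    evidence: Dict[str, List[str]],
) -> List[Alert]:
    """Return one human-readable alert per metric that violates its threshold."""
    alerts: List[Alert] = []
    for name, value in daily_metrics.items():
        threshold = thresholds.get(name)
        if threshold is not None and threshold.violated_by(value):
            alerts.append(
                Alert(name, value, threshold.describe(name, value), evidence.get(name, []))
            )
    return alerts


metrics = {"mean_latency_ms": 950.0, "judge_pass_rate": 0.97}
thresholds = {
    "mean_latency_ms": Threshold(upper=800.0),
    "judge_pass_rate": Threshold(lower=0.9),
}
evidence = {"mean_latency_ms": ["trace-0412", "trace-0977"]}
for alert in check_thresholds(metrics, thresholds, evidence):
    print(alert.message, alert.evidence)
```

Attaching evidence to each alert at creation time keeps every message traceable to the logs behind it, whichever channel it is later sent to.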

Adapt future analysis to your preferences

As you interact with these signals, Distributional makes it easy to convert them into metrics with thresholds. Distributional learns your preferences from these actions, and future pipeline runs reinforce these choices over time.

Try it today

This pipeline automatically arms your team with a fresh set of daily signals on your AI products, which help your team continuously improve them. The pipeline is designed to run this unsupervised analysis on 100% of your production AI logs at scale, so you don’t miss any interesting signals. And it requires minimal effort from your team, so you get these signals “for free” while your team works on new AI features.

Distributional’s full service is open and free to use. Try it today to experience these enterprise features yourself. We are also always happy to learn more about your use case and enterprise needs, so reach out to nick-dbnl@distributional.com with any questions.