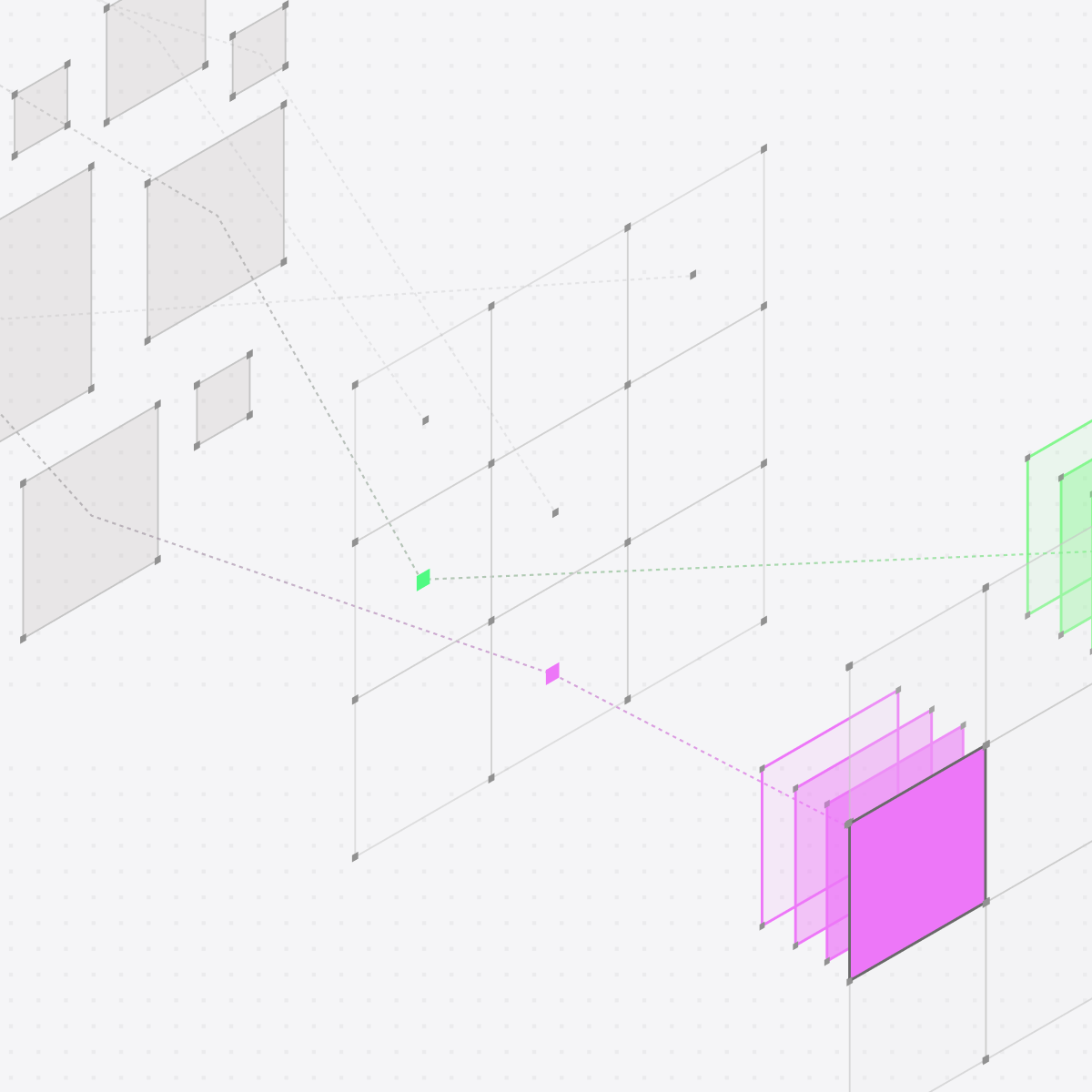

Distributional’s adaptive analytics platform (DBNL) empowers these teams to have confidence in AI behavior—the interplay and correlations between users, context, tools, models, and metrics. In general, a better understanding of behavior helps teams fix, improve, and scale their AI products. But what does this mean more concretely?

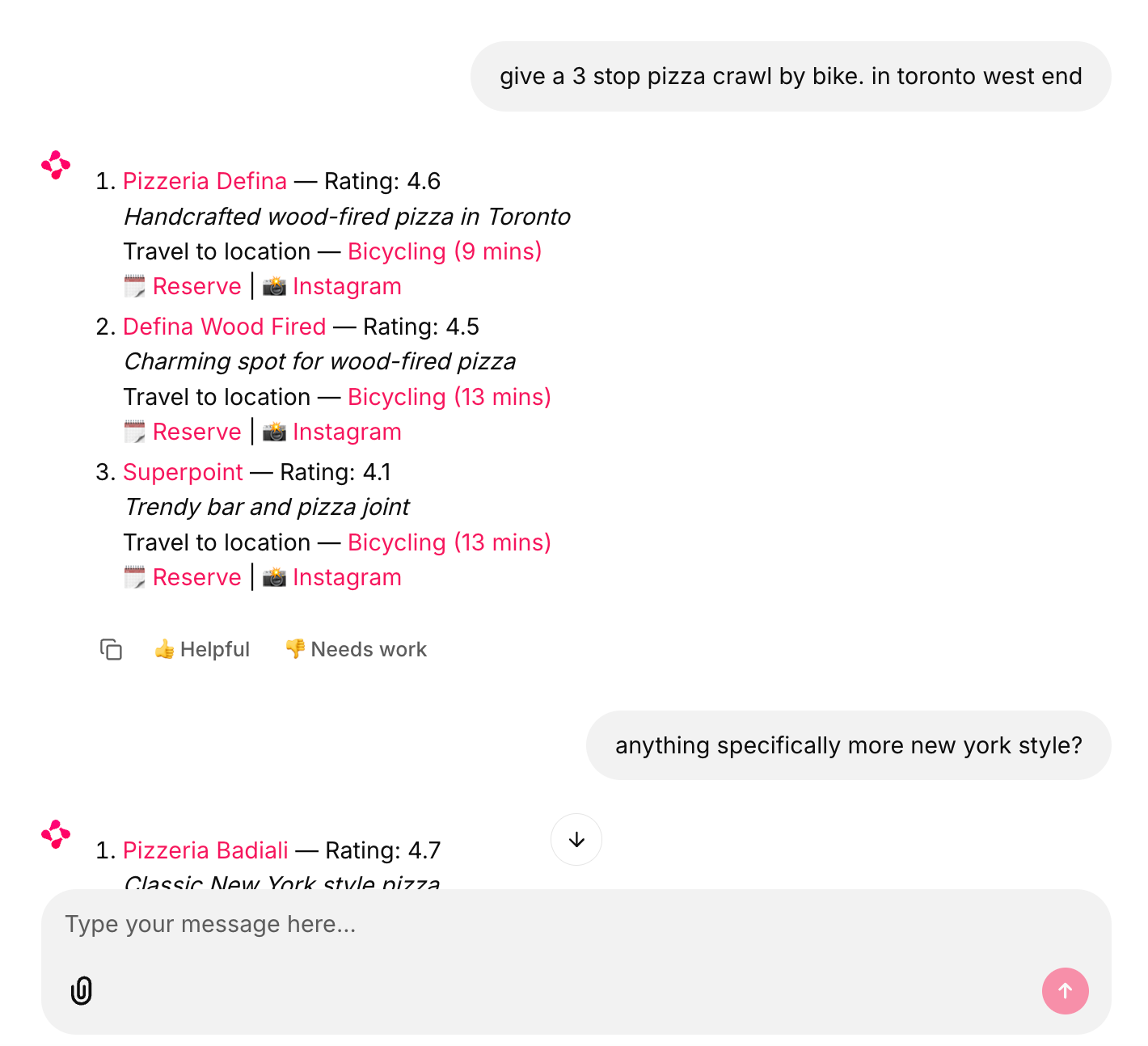

In this post, we’ll walk through a high-level example of applying DBNL to an outing agent, with a focus on discovering and fixing an issue. If you prefer learning by watching, you can check out this video instead (we also recommend watching it alongside the post):

In this 5-minute demo, you’ll learn how to:

- Understand the cost, quality, speed, and usage of an agent with ~7,000 daily requests

- Identify a quality issue in production logs

- Track the issue by adding a new metric

- Confirm the next deployed change fixes the issue

Setup

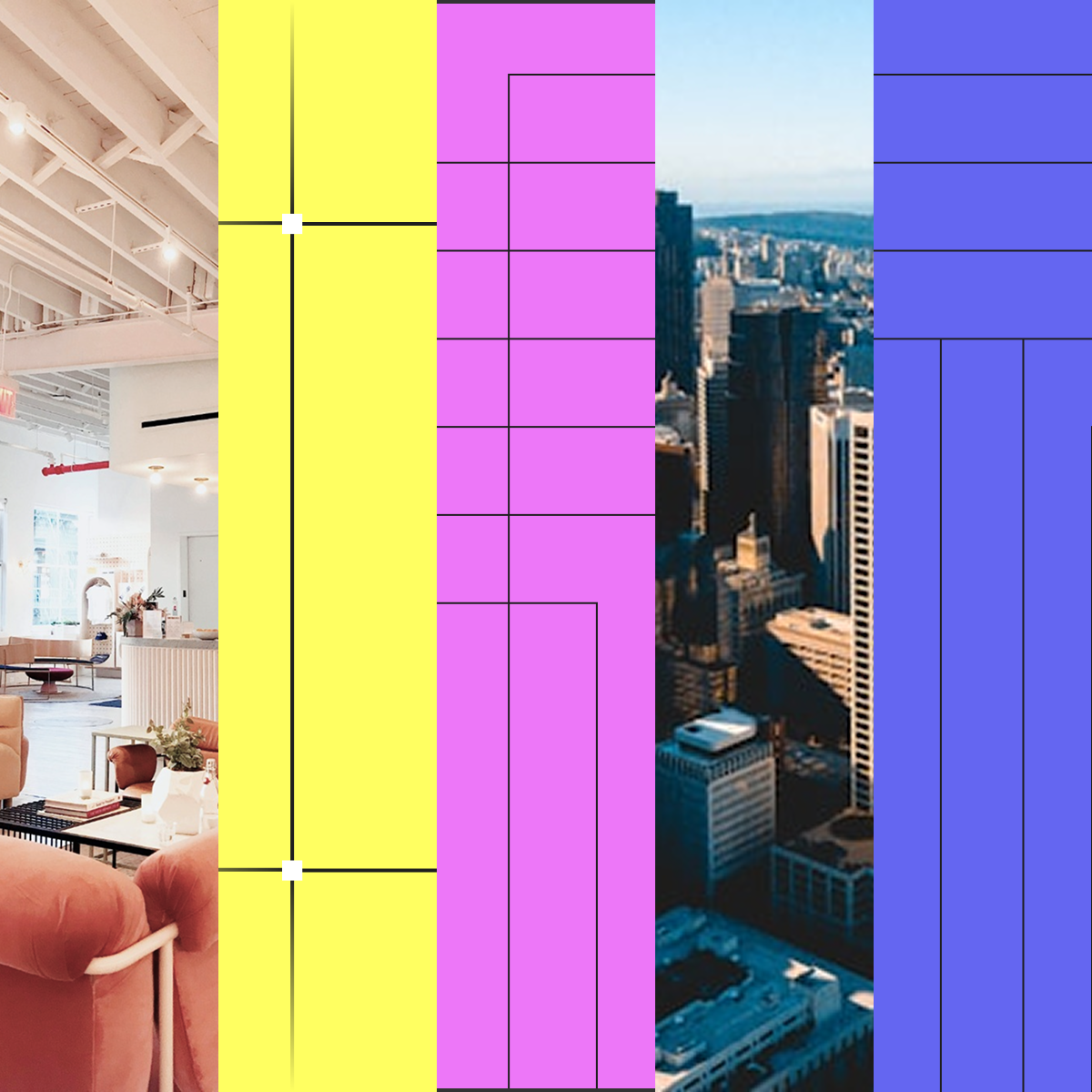

We built and scaled an outing agent in production using NVIDIA’s NeMo Agent Toolkit (NAT) v1.3.0. This outing agent provides a tool to find and create an itinerary for activities based on user prompts. We use Qwen3-Next-80B-A3B-Instruct for this agent.

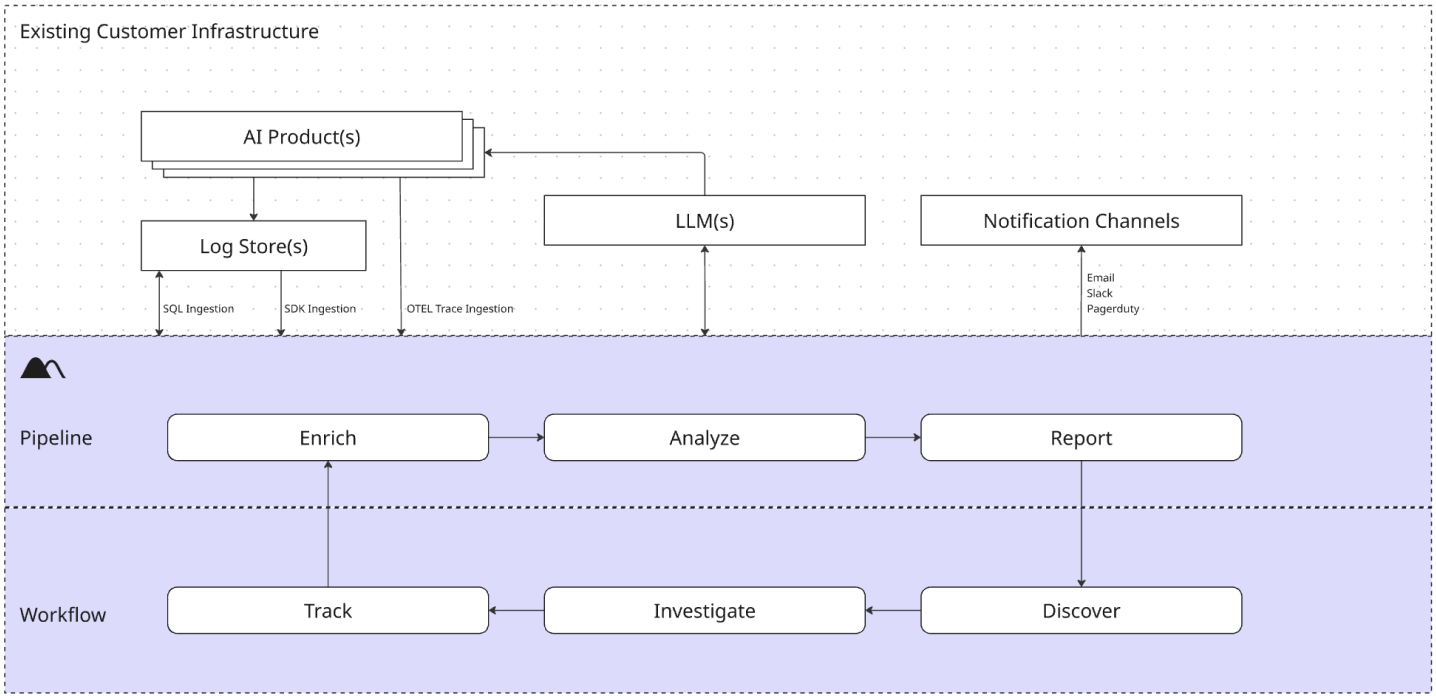

As it runs, NAT publishes traces, which DBNL analyzes. DBNL enriches these traces with LLM-as-judge and standard metrics, then analyzes these metrics to uncover behavioral signals hidden in these logs. DBNL uses gpt-oss-20B for LLM-as-judge, and efficiently scales this judge by using a NIM on a p5 instance.
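To make the enrichment step concrete, here is a minimal sketch of attaching standard metrics and one judge score to a trace. It is an illustration only: the `Trace` record, the `enrich` function, and the `stub_judge` placeholder are hypothetical names, not the NAT trace schema or the DBNL API, and a real judge would call an LLM rather than count overlapping words.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class Trace:
    # Hypothetical, simplified trace record; real NAT traces carry far more detail.
    query: str
    response: str
    start_s: float
    end_s: float
    metrics: Dict[str, float] = field(default_factory=dict)


def enrich(trace: Trace, judge: Callable[[str, str], float]) -> Trace:
    """Attach two standard metrics and one judge score to a trace."""
    trace.metrics["latency_s"] = trace.end_s - trace.start_s
    trace.metrics["response_words"] = float(len(trace.response.split()))
    trace.metrics["answer_relevancy"] = judge(trace.query, trace.response)
    return trace


def stub_judge(query: str, response: str) -> float:
    # Placeholder for an LLM-as-judge call (assumption): the fraction of
    # query words that also appear in the response.
    q = set(query.lower().split())
    r = set(response.lower().split())
    return len(q & r) / len(q) if q else 0.0
```

Passing the judge in as a callable keeps the sketch independent of any particular model or serving setup, so the stub can be swapped for a real judge without changing `enrich`.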

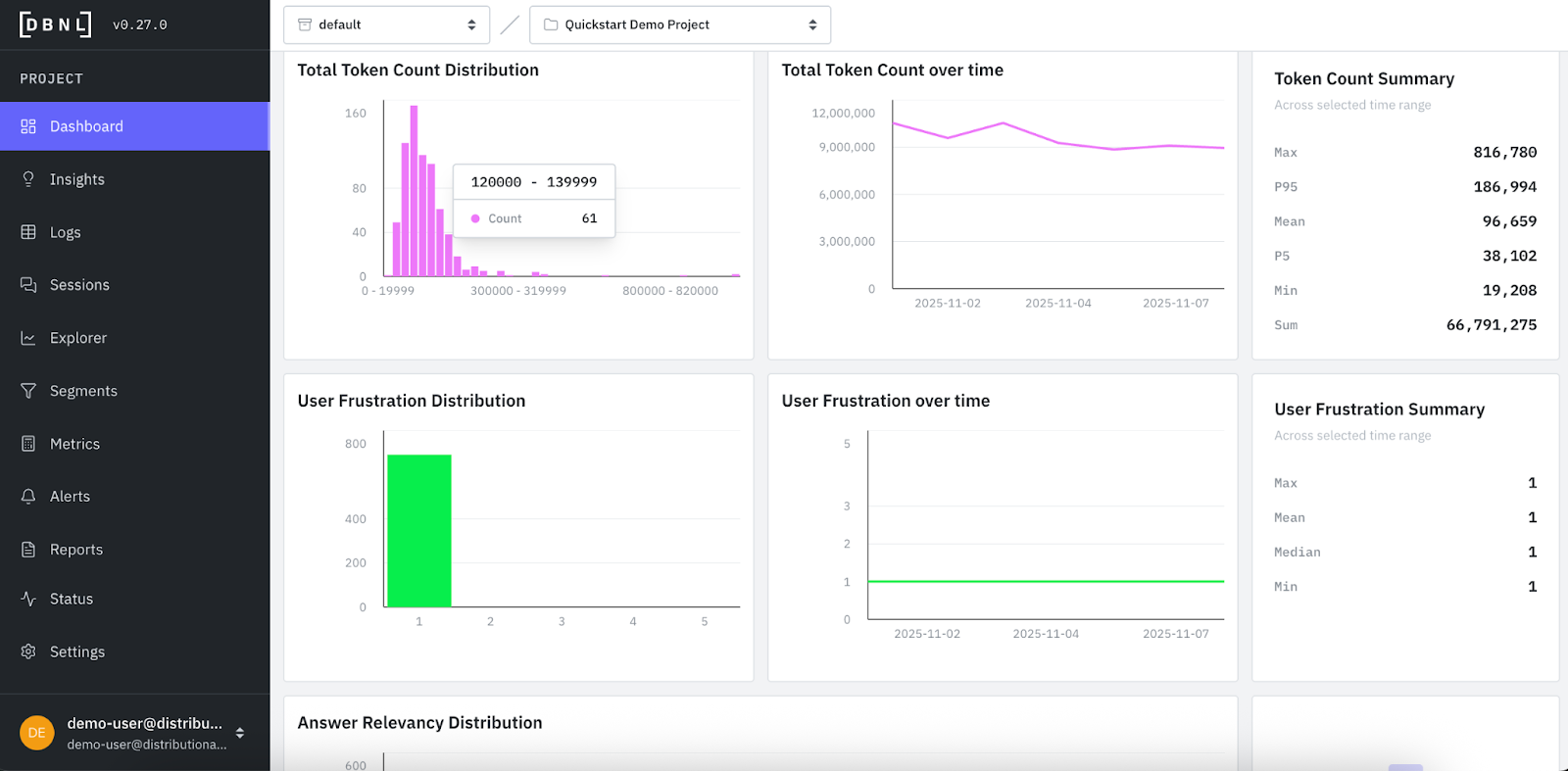

This outing agent receives ~7,000 requests per day, which is too many for manual trace review. Monitoring is a good way to ensure the agent is online and performing, but it doesn’t give perspective on behavior, or on whether there are more pernicious issues or opportunities to improve the product.

As DBNL discovers new signals, we use NAT functionality such as its HPO feature to improve or fix the agent, guided by insights from these signals.

Issue

For the first week, we published the agent’s log data to DBNL and quickly came to a more comprehensive understanding of baseline behavior.

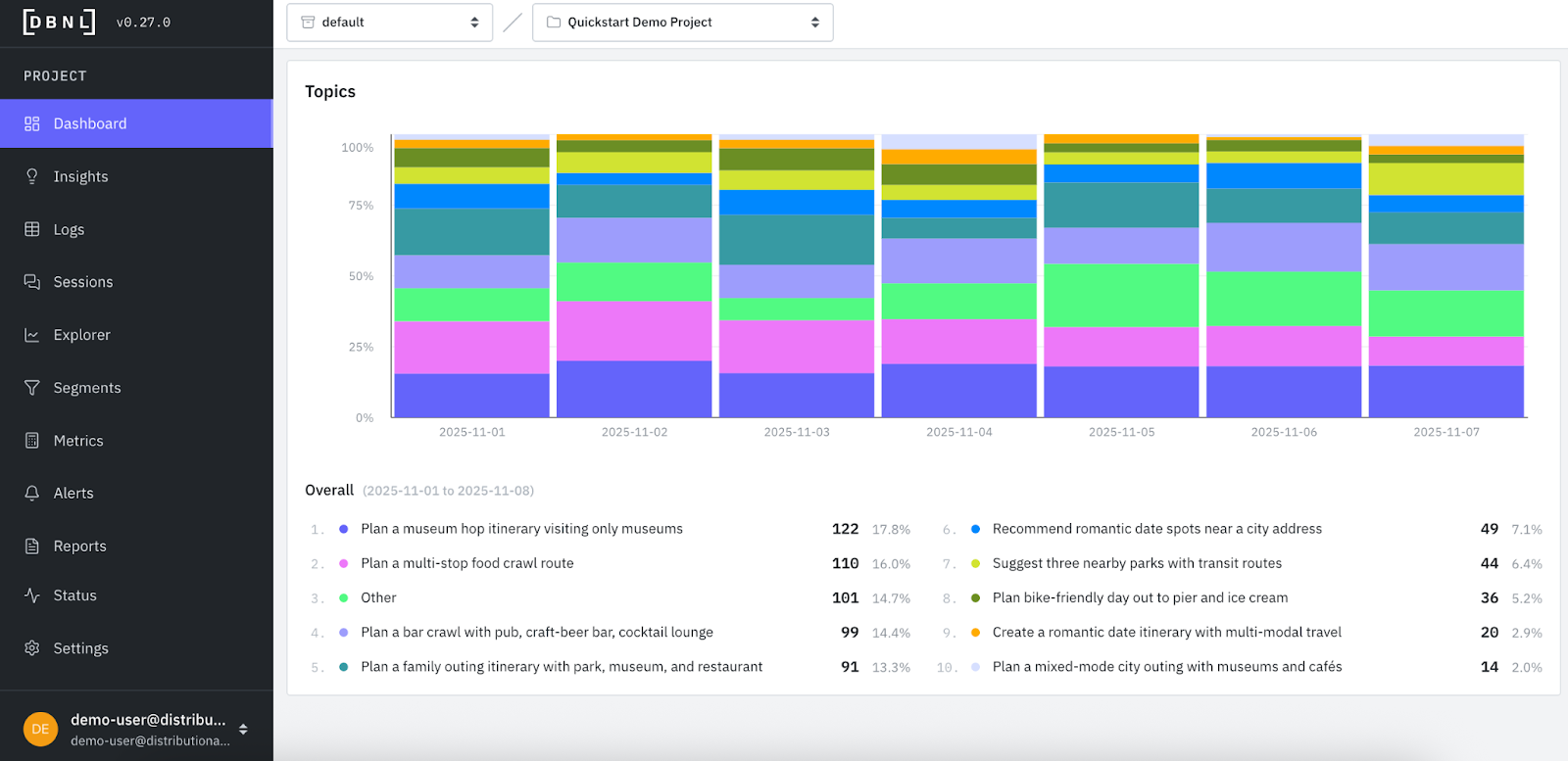

At the end of the first week, we had enough data for DBNL to analyze signals on how this behavior was evolving over time. This included the appearance of topics, which represent DBNL’s unsupervised learning of canonical categories and continuous classification of queries into these groupings.

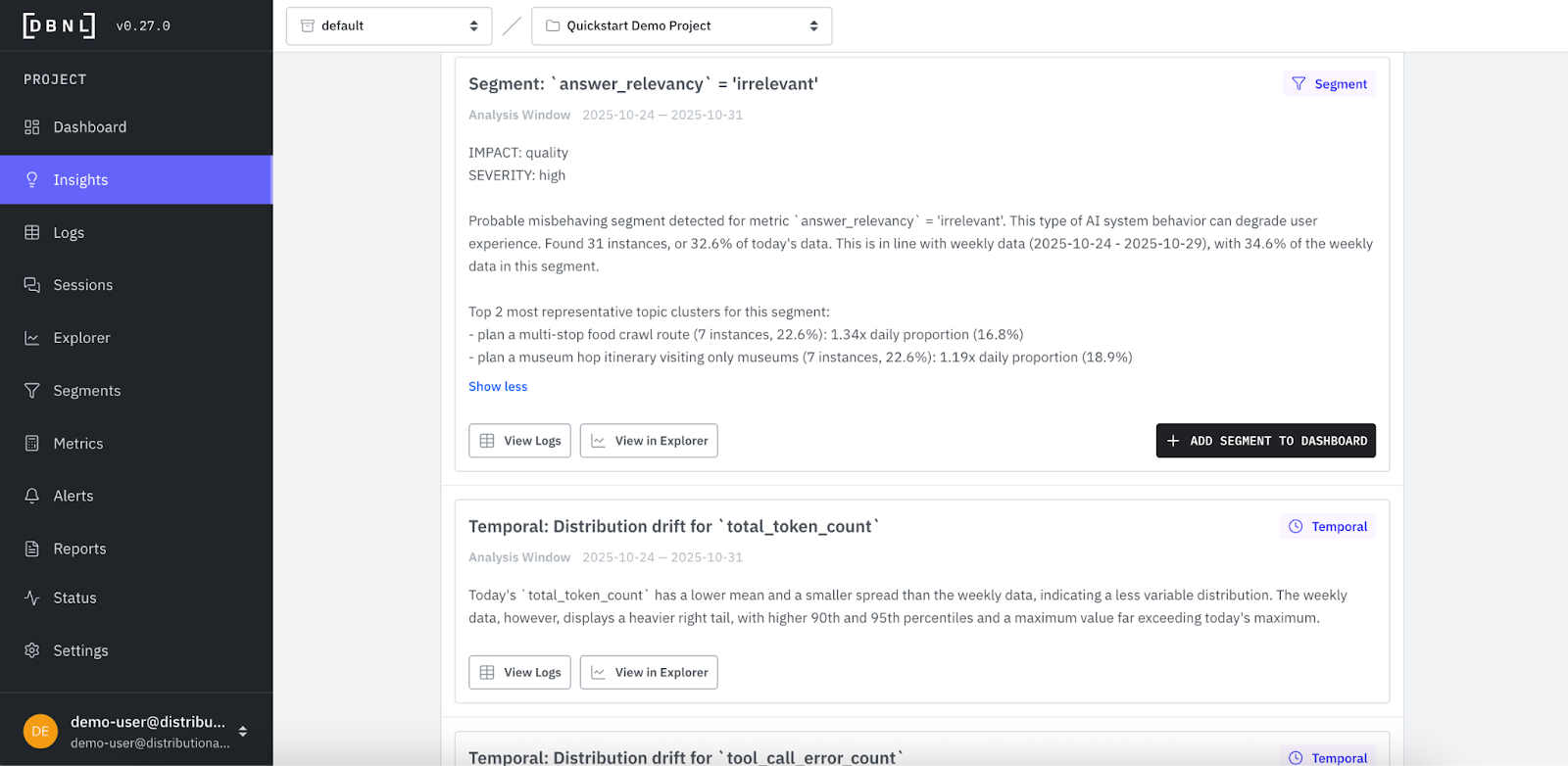

At the same time, DBNL began providing daily insights. These insights were on specific segments or metrics of interest, and typically involved cost, quality, speed, or interesting usage patterns that need further investigation.

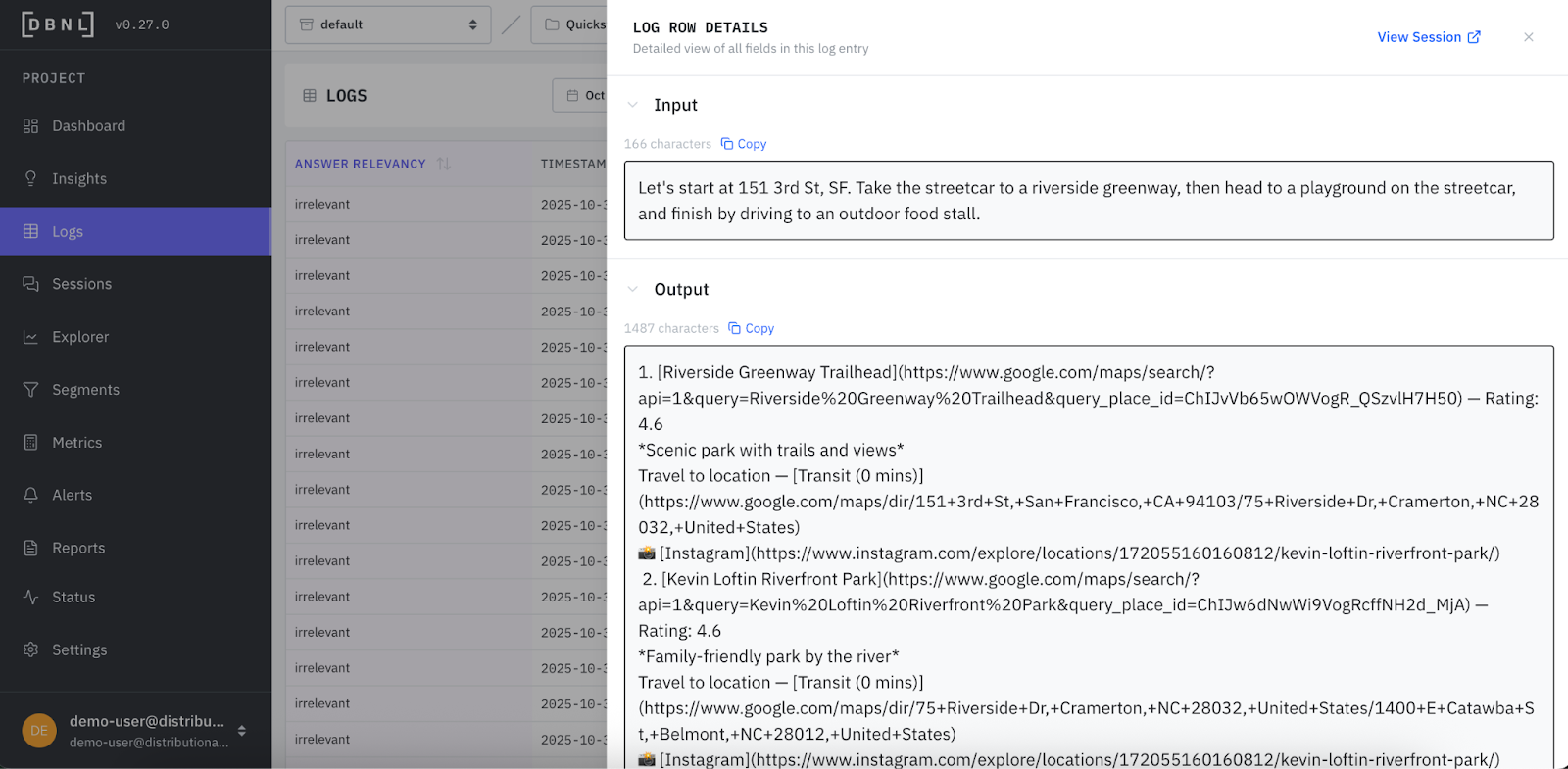

This insight on answer irrelevancy was flagged as high severity and as impacting quality, so it deserved more attention. When we viewed logs, DBNL gave us the sample of traces that were flagged as irrelevant and responsible for this insight.

As we sampled the first few of these logs, it became apparent that the issue was related to geolocation. Our outing agent was consistently giving directions to places that had no connection to the location mentioned in the query. One recurring example: the user asks for an outing in San Francisco, and the agent reports plans for the Riverside Greenway Trailhead in North Carolina. In this case, it seems that the agent latches onto any mention of a generic riverside greenway and immediately treats it as a request for this specific Riverside Greenway in North Carolina.
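The kind of pattern we spotted by reading logs can also be tallied with a few lines of code. The sketch below is a hypothetical illustration, not how DBNL detects the issue: `PLACE_REGION` is a hand-written lookup invented for this example, and a real check would rely on a geocoder or an LLM judge rather than substring matching.

```python
from collections import Counter
from typing import Iterable, Set, Tuple

# Hypothetical lookup of place names to regions (assumption for illustration).
PLACE_REGION = {
    "san francisco": "CA",
    "golden gate park": "CA",
    "riverside greenway trailhead": "NC",
}


def regions_mentioned(text: str) -> Set[str]:
    """Return the regions of all known places mentioned in the text."""
    lowered = text.lower()
    return {region for place, region in PLACE_REGION.items() if place in lowered}


def mismatched_places(flagged: Iterable[Tuple[str, str]]) -> Counter:
    """Count response places whose region does not appear in the query."""
    counts: Counter = Counter()
    for query, response in flagged:
        query_regions = regions_mentioned(query)
        if not query_regions:
            continue  # no known location in the query, nothing to compare
        lowered = response.lower()
        for place, region in PLACE_REGION.items():
            if place in lowered and region not in query_regions:
                counts[place] += 1
    return counts
```

Run over the (query, response) pairs from the flagged sample, a tally like this would put a recurring offender such as the Riverside Greenway Trailhead at the top of the list.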

Resolution

In this case, the issue required a simple tweak to the system prompt and the addition of a “riverside greenway” prompt to the golden dataset we run for offline eval once per month. We also began tracking geolocation as an additional LLM-as-judge metric in DBNL, which was then automatically added to our dashboard and computed daily alongside the out-of-the-box metrics. This ensured we could continuously confirm that the change resolved the issue with minimal additional effort.
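As a rough sketch of what a geolocation judge metric and a golden dataset entry might look like, consider the following. Everything here is an assumption made for illustration: the prompt wording, the `geolocation_metric` function, the `call_judge` callable, and the `GOLDEN_CASES` structure are hypothetical and do not reflect DBNL’s metric definition format or our actual prompt.

```python
from typing import Callable

# Hypothetical judge prompt; the wording is illustrative only.
JUDGE_TEMPLATE = (
    "You are grading an outing agent.\n"
    "User query: {query}\n"
    "Agent response: {response}\n"
    "Are all places in the response located in the area the user asked about? "
    "Answer with exactly one word: PASS or FAIL."
)


def geolocation_metric(
    query: str, response: str, call_judge: Callable[[str], str]
) -> int:
    """Return 1 if the judge says locations match the query, else 0."""
    prompt = JUDGE_TEMPLATE.format(query=query, response=response)
    verdict = call_judge(prompt).strip().upper()
    if verdict not in {"PASS", "FAIL"}:
        raise ValueError(f"Unexpected judge output: {verdict!r}")
    return 1 if verdict == "PASS" else 0


# Hypothetical golden dataset entry covering the regression we found.
GOLDEN_CASES = [
    {
        "query": "Find a riverside greenway walk in San Francisco",
        "expected_area": "San Francisco",
    },
]
```

Constraining the judge to a single PASS or FAIL token keeps the metric easy to aggregate into a daily rate, and raising on any other output surfaces judge drift instead of silently scoring it.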

Using DBNL, we were able to quickly discover an issue with geolocation, fix it, and confirm the change over time. We learned more about our product without needing to take the time to spin up our own data analysis. And it kept our team on the same page regarding our outing agent’s behavior.

Next

Distributional is a free, open, and installable platform for agent analytics. Try it today and quickly learn how it complements your existing agent observability stack. We are also always happy to learn more about your use case and enterprise needs, so reach out to contact@distributional.com with any questions.